Shaping Architectural Work Together

How DB Systel achieved more clarity, participation and quality with LASR

DB Systel: Digitalization for the Entire Group

One DB Systel team is developing a web-based information system that will be used in DB Cargo’s European freight transport operations. The system consolidates data from various sources, assists dispatchers in planning and managing transports, and plays a crucial role in ensuring operational transparency within a highly complex environment. This case study explores the team’s experience with LASR, from the initial contact to the retrospective evaluation of the first application.

Starting Point

High Responsibility - Limited Shared Understanding of Architecture

After more than three years of development, our system had grown significantly: six services, numerous interfaces, and ongoing functional enhancements. As a result, pockets of expertise had emerged, and only a few colleagues had a comprehensive understanding of the overall system and its architecture. The team was technically proficient, but communication tended to be reserved. Several years of remote work compounded this, making informal conversations and spontaneous architecture discussions harder. Within the team, feedback on the software’s quality became increasingly critical, though it often remained vague.

We were therefore looking for a method that would allow us to address both quality and architectural concerns collaboratively while actively involving all team members. LASR – the Lightweight Approach for Software Reviews – appeared to be the structured yet accessible format we needed to bring architectural topics into the team’s discussions.

LASR Fact Sheet

Participants:

8 people

Organizational Level:

Team

Time Spent:

- Understand what makes you special (Steps 1-2): 60 min

- Explore your Architecture (Steps 3-4): 90 min + 90 min

- Extra Time: 30 min for TODOs and next steps

Results:

- Identified Risks: 8

- Biggest Gaps: Maintainability, Functional Suitability

Our LASR Experience

Step 1 - Lean Mission Statement

Step 2 - Evaluation Criteria

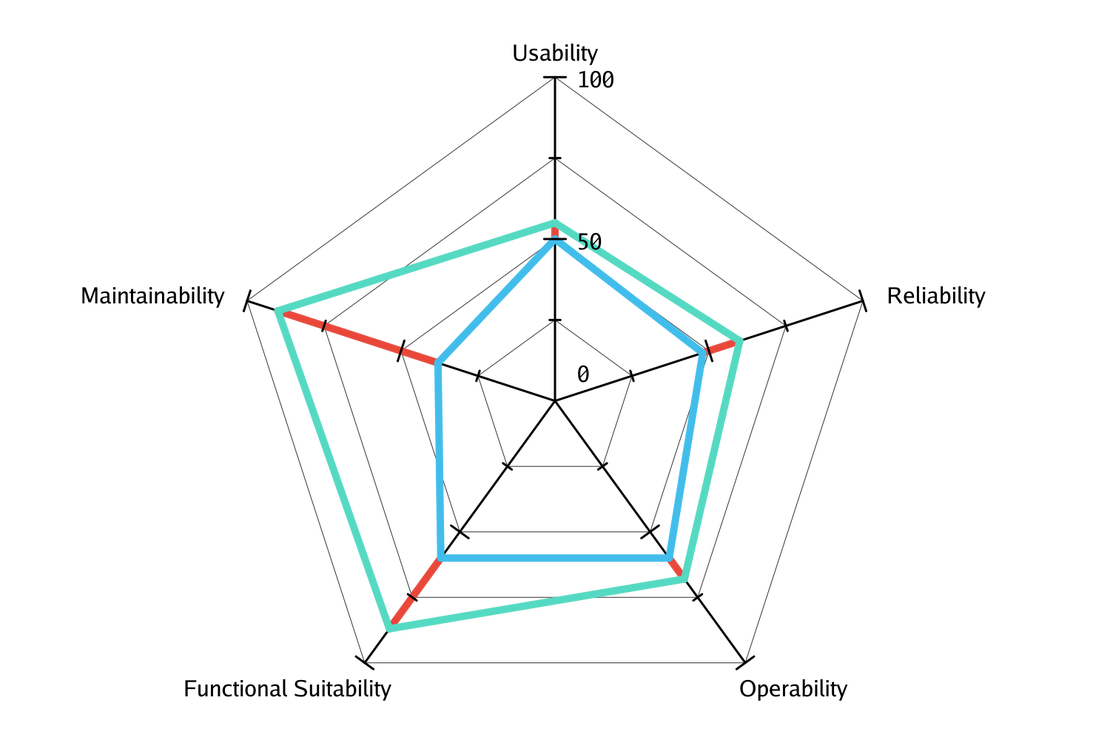

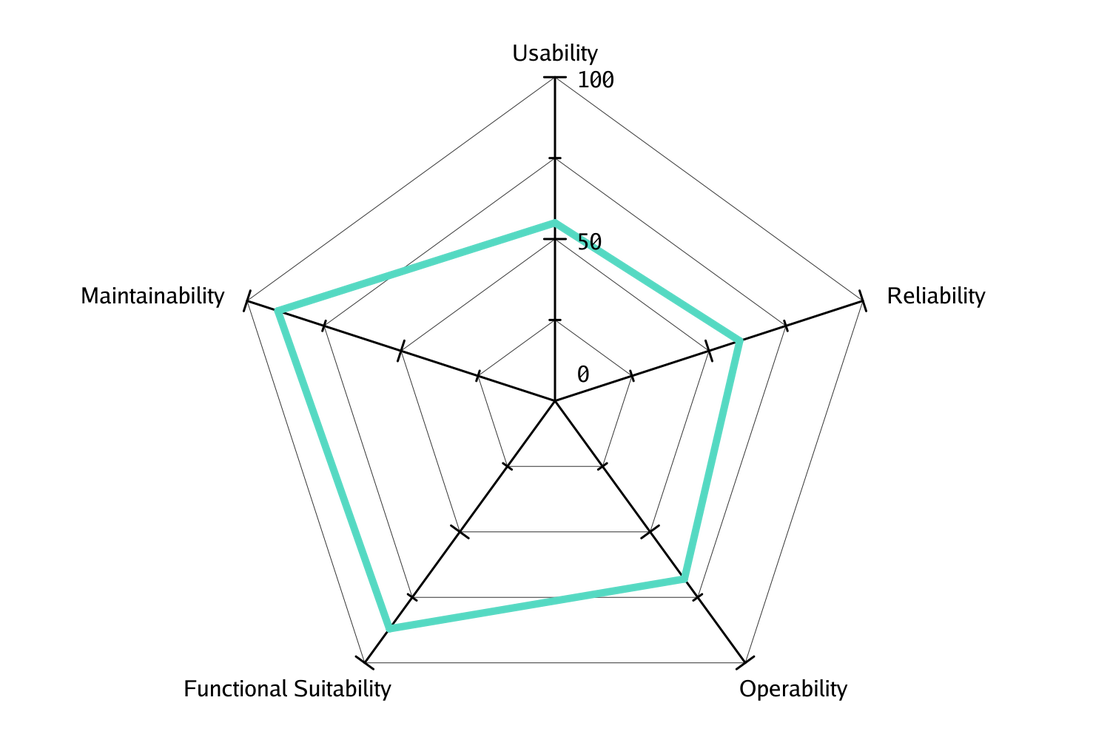

We chose five quality goals as our evaluation criteria: usability, reliability, operability, functional suitability, and maintainability. We also noted a few key points on post-its to document why we selected them. The spider web diagram shows these goals alongside their target values.

Step 3 - Risk-based Review

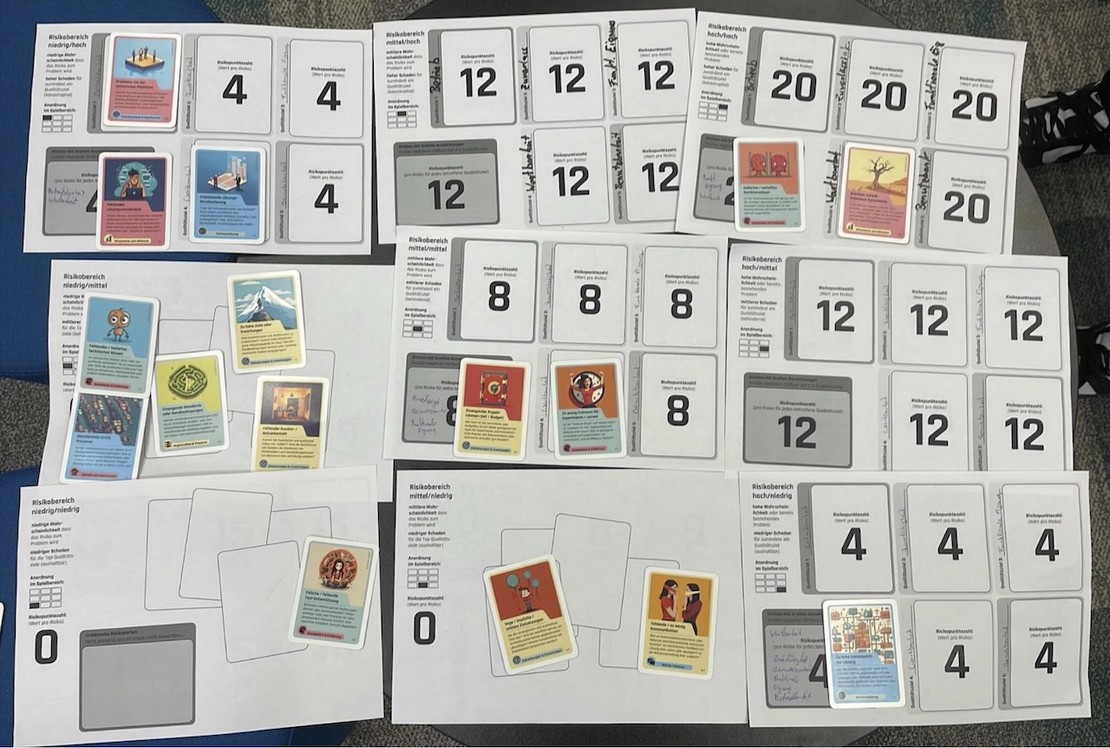

The rest of the risk-based review followed the standard procedure. Below is a photo from the workshop. A quick tip: our table was slightly too small. It should measure at least 1 m × 1 m, and a little extra space for writing post-its certainly helps.

We set timeboxes of 3-5 minutes for classifying each risk by impact and likelihood, and took notes along the way. The resulting diagram, shown in the fact sheet, revealed significant gaps in maintainability and functional suitability.
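
For teams that want to keep their workshop notes in a digital form, the classification by impact and likelihood could be recorded roughly as follows. This is only a sketch under stated assumptions, not LASR's official scoring: the 1-3 scales, the product `impact * likelihood` as a severity value, the `Risk` class, and the placeholder risk entries are all invented for illustration and do not reflect our actual workshop results.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    """One post-it from the risk-based review (hypothetical structure)."""
    title: str
    quality_goal: str
    impact: int      # assumed scale: 1 (low) to 3 (high)
    likelihood: int  # assumed scale: 1 (low) to 3 (high)

    @property
    def severity(self) -> int:
        # Assumption: severity is the simple product of both ratings.
        return self.impact * self.likelihood


def severity_by_goal(risks):
    """Sum severities per quality goal, highest total first."""
    totals = defaultdict(int)
    for risk in risks:
        totals[risk.quality_goal] += risk.severity
    return dict(sorted(totals.items(), key=lambda kv: kv[1], reverse=True))


# Placeholder entries, not real findings from the workshop.
risks = [
    Risk("Placeholder risk A", "maintainability", impact=3, likelihood=3),
    Risk("Placeholder risk B", "functional suitability", impact=3, likelihood=2),
    Risk("Placeholder risk C", "usability", impact=1, likelihood=2),
]

ranking = severity_by_goal(risks)
for goal, total in ranking.items():
    print(f"{goal}: {total}")
```

A ranking like this can help decide which quality goal to discuss first, but it is no substitute for the conversation around each risk.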

Step 4 - Quality-focused Analysis

What Has Come of It?

We are still working with the list of strengths and weaknesses that we initially developed during the LASR workshop.

Insights and Tips

Rhythm:

Software is a living product, so we plan to hold a LASR workshop once a year from now on. Each session can be slightly shorter than the last, as everyone is already familiar with the method.

Playing Cards:

The cards worked really well. Even participants who are typically reserved were motivated to contribute by the vibrant illustrations and the tactile experience. The Top-5 Challenger game mechanism also engaged everyone effectively and sparked new ideas. It was the perfect fit for our starting point.

Preparation Times:

We allocated about 10 minutes of preparation time or buffer for each step. During this time, we introduced the steps and extended the timeboxes when necessary. It was extremely valuable for us to take the time to thoroughly discuss important findings.

Result:

Although the result after our half-day workshop was somewhat preliminary, the key risk topics were identified, allowing us to work on the details during our quality sprints. LASR provided us with plenty of food for thought!

"The key risk topics were identified, allowing us to work on the details during our quality sprints."

Alina Stürck

Business Engineer